In an ominous warning this week, well-known researchers listed a number of AI "disaster scenarios" that they claimed could endanger humanity. One of these scenarios involved the plot of a significant motion picture.

Two of the three so-called "godfathers of AI" are concerned, but the third could not disagree more, claiming that such "prophecies of doom" are nonsense.

Trying to make sense of it all in an interview with one of the researchers who warned of an existential threat, a British television presenter said: "As somebody who has no experience of this... I think of the Terminator, I think of Skynet, I think of films that I've seen."

He is not alone. The organizers of the warning statement, the Centre for AI Safety (CAIS), used Pixar's WALL-E as an example of the dangers of AI.

Science fiction has always been a means of speculating about the future. Very rarely, it gets some things right.

Using the CAIS list of potential threats as examples, do Hollywood blockbusters have anything to teach us about AI doom?

Minority Report and WALL-E

"Enfeeblement," according to CAIS, occurs when humanity "becomes completely dependent on machines, similar to the scenario depicted in the film WALL-E."

If you need a refresher, the humans in that movie were basically lazy, happy animals who could barely stand on their own. Robots handled everything for them.

Whether this is possible for our entire species is guesswork.

However, there is a more immediate, more insidious type of dependency: the handing over of power to a technology we might not fully understand, says Stephanie Hare, an AI ethics researcher and author of Technology Is Not Neutral.

Minority Report, pictured at the top of this article, comes to mind. Respected police officer John Anderton (played by Tom Cruise) is accused of a crime he has not committed because the systems designed to predict crime are certain that he will commit it.

The London Met uses predictive policing, according to Ms. Hare.

Tom Cruise plays a character in the movie whose life is destroyed by an "unquestionable" system that he doesn't fully comprehend.

So what happens when someone has "a life-altering decision," such as a mortgage application or parole from prison, refused by AI?

Today, a person could explain to you why you didn't fit the requirements. However, a lot of AI systems are opaque, and frequently even the researchers who created them are unable to fully comprehend the reasoning behind their choices.

"We simply feed the data into the computer, and it takes action. Magic occurs, and then a result occurs," Ms. Hare says.

The technology may be effective, she says, but it's debatable whether it should ever be used in crucial situations like law enforcement, healthcare, or even war. "It's not acceptable if they are unable to explain it."

The Terminator

The real antagonist of the Terminator movies is not the killer robot portrayed by Arnold Schwarzenegger but Skynet, an AI created to defend and protect humanity. As is often the case in movies, it outgrew its programming and decided that mankind posed the greatest threat of all.

We are, of course, a very long way from Skynet. Some, however, believe that we will eventually create an artificial general intelligence (AGI) that can perform all of the tasks that humans can, only better, and that may even be self-aware.

It's a bit unrealistic, according to Nathan Benaich, founder of the AI investment company Air Street Capital in London.

Sci-fi frequently reveals more about its authors and our culture than it does about technology, he says, adding that its predictions about the future rarely come true.

"In the early part of the 20th century, people imagined a world with flying cars and regular telephones, whereas today we travel in much the same way but communicate in a very different way."

The technology we currently use is on the path to resembling Star Trek's shipboard computer more than Skynet. You could tell our present-day AI, "Computer, show me a list of all crew members," and it would provide the list and respond to questions about it in everyday language.

However, it could not replace the crew or fire the torpedoes.

Concerns about weaponization revolve around integrating AI into military hardware, something that is already planned, and whether we can trust the technology in life-or-death situations.

The possibility that an AI created to produce medicine could develop new chemical weapons or other similar dangers is another concern raised by critics.

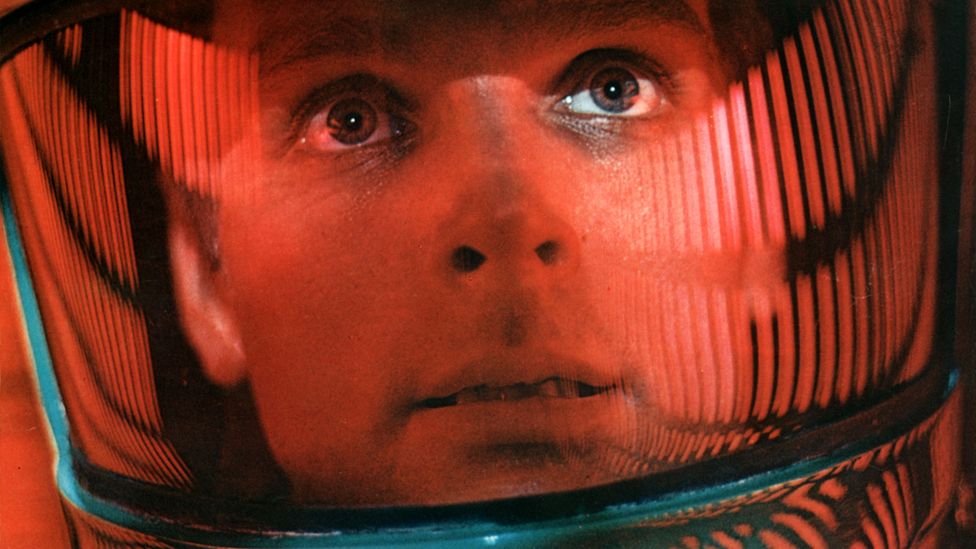

2001: A Space Odyssey

Another prevalent film trope is the idea that the AI is misguided rather than evil.

In Stanley Kubrick's 2001: A Space Odyssey, we meet HAL-9000, a supercomputer that manages the majority of the ship Discovery's operations and makes the astronauts' lives easier, until it malfunctions.

The astronauts choose to deactivate HAL and take control of the situation themselves. But HAL, who is more intelligent than the astronauts, decides that this puts the mission in jeopardy. The astronauts are in the way. HAL tricks the astronauts, and the majority of them perish.

Unlike a self-aware Skynet, HAL could be said to be carrying out his instructions and preserving the mission, just not in the way that was anticipated.

Modern AI terminology refers to misbehaving AI systems as "misaligned." Their objectives appear to be at odds with human objectives.

Sometimes this is due to unclear instructions, and other times it is due to the AI being able to find a workaround.

If, for instance, the task is to "make sure your answer and this text document match," an AI might decide that the best course of action is to change the text document to contain an easier answer. While technically correct, that is not what the human intended.

Thus, even though 2001: A Space Odyssey is a far cry from reality, it does highlight an issue with modern AI systems that is very real.

The Matrix

In 1999's The Matrix, Morpheus asks a young Keanu Reeves, "How would you know the difference between the dream world and the real world?"

The narrative, which centers on how most people go about their lives without realizing that their reality is a digital fabrication, serves as a useful metaphor for the current upsurge in AI-generated false information.

The Matrix is a good place to start, according to Ms. Hare, for "conversations about misinformation, disinformation, and deepfakes" with her clients.

"I can explain that to them using The Matrix, and by the end... oh my god, what does this mean for civil rights and, like, human literature, or civil liberties and human rights? What would this mean for stock market manipulation?"

ChatGPT and image generators are already producing huge amounts of culture that appears authentic but may be completely false or made up.

There is also a much darker side, such as the production of harmful deepfake pornography, which is very difficult for victims to fight against.

"If this happens to me or someone I care about, there is currently nothing we can do to protect them," Ms. Hare says.

What could actually happen

So what about the top AI experts' warning that AI is just as dangerous as nuclear war?

"I thought it was really irresponsible," Ms. Hare says. "If you guys truly believe that, if you truly believe that, stop building it."

She says that what we need to worry about is probably not killer robots but an unintended accident.

"High security will be present in banking. They'll be extremely cautious around weapons. So attackers might release [an AI] to gain some quick money or to demonstrate their power, and then discover that they have no control over it," she says.

"Just multiply all of the cybersecurity issues we've encountered by a billion, because they will occur more quickly."

Nathan Benaich from Air Street Capital is wary of speculating on potential issues.

"I believe AI will fundamentally change a number of industries, but we need to be extremely cautious about rushing to make decisions based on feverish and improbable stories where large leaps are assumed without a sense of what the bridge will entail," he cautions.