In a video that surfaced in March last year, Ukrainian President Volodymyr Zelensky appeared to tell his people to lay down their arms and surrender to Russia.

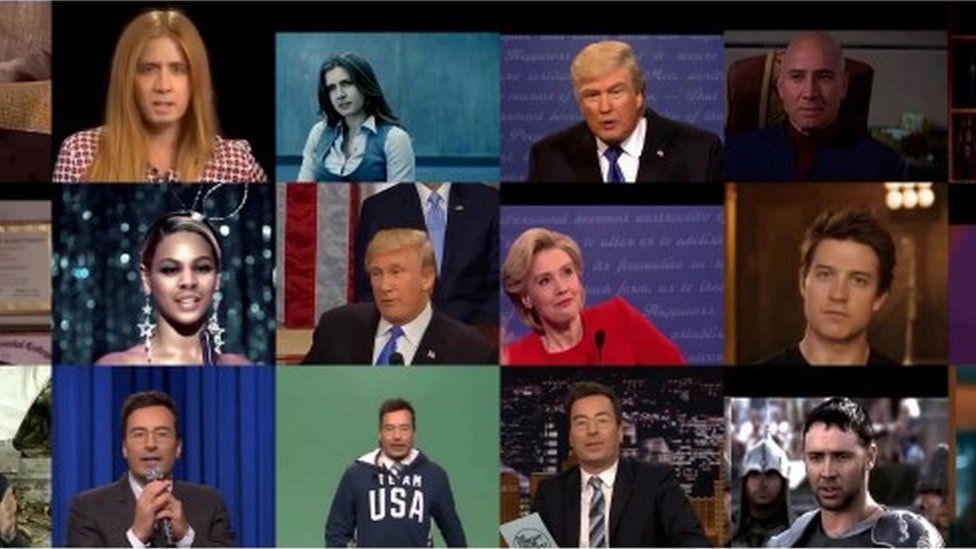

It was pretty clear that the video was a deepfake: a type of fake video that uses artificial intelligence to swap faces or create a digital version of someone.

But as advances in AI make deepfakes easier to produce, being able to spot them is more important than ever.

Intel believes it has a solution, and it is all about the blood in your face.

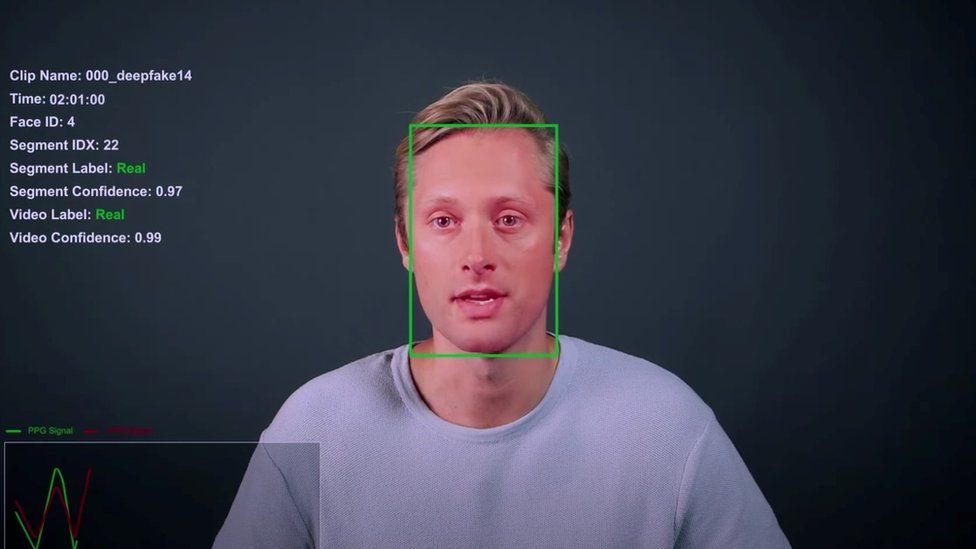

The company has named the system "FakeCatcher".

In Intel's plush, largely empty offices in Silicon Valley, Ilke Demir, a research scientist at Intel Labs, explains to us how it works.

"What is real about us? What is real about authentic videos? What is the watermark of being human?" she asks.

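At the heart of the system is a technique called photoplethysmography (PPG), which detects changes in blood flow. As the heart pumps, the flow of blood subtly changes the colour of our skin, a change a camera can pick up even when the naked eye cannot.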

Deepfake faces do not give off these signals, she says.
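To make the idea more concrete, here is a minimal Python sketch of how a pulse might be looked for in a video. It is an illustration under stated assumptions, not Intel's code. It assumes some earlier step has already averaged the green channel over a patch of facial skin in each frame (the hypothetical `green_means` input), and it asks what share of that signal's power falls in a plausible heart-rate band, taken here as roughly 42 to 240 beats per minute (0.7 to 4 Hz, an assumed range).

```python
import numpy as np

def pulse_band_ratio(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Share of a skin-colour signal's power that sits in a heart-rate band.

    green_means: hypothetical input, the average green value of a patch of
    facial skin in each video frame. fps: frames per second of the video.
    low_hz/high_hz: an assumed band of about 42 to 240 beats per minute.
    """
    signal = np.asarray(green_means, dtype=float)
    signal = signal - signal.mean()  # drop the steady skin brightness
    power = np.abs(np.fft.rfft(signal)) ** 2  # power at each frequency
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    total = power[1:].sum()  # skip the zero-frequency bin
    if total == 0:
        return 0.0  # a perfectly flat signal carries no pulse at all
    in_band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(power[in_band].sum() / total)
```

In this toy setup, a steady 1.2 Hz ripple (72 beats per minute) with a little added noise scores close to 1, while pure noise scores much lower. A real detector would need far more than this, for example handling head movement, lighting changes and compression.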

The system also analyses eye movement to confirm that a video is authentic.

"Humans typically look at a point. When I look at you, it is as though I'm beaming light at you from my eyes. But with deepfakes, the eyes are divergent, like googly eyes," she says.
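Ms Demir's point about the eyes can be sketched as a simple geometry check. The Python below is an illustration under assumptions, not FakeCatcher's method: it takes hypothetical 3D eye positions and gaze directions (which some other tool would have to estimate from the video) and measures how close the two lines of sight come to meeting in front of the face.

```python
import numpy as np

def gaze_miss_distance(left_eye, left_dir, right_eye, right_dir):
    """How close two lines of sight come to meeting in front of the face.

    Returns the gap (in the same units as the inputs) between the closest
    points of the two gaze rays, float("inf") if the rays are closest behind
    the eyes (divergent gaze), or None if the rays are parallel, in which
    case there is no finite fixation point for this toy check to judge.
    """
    p1, d1 = np.asarray(left_eye, float), np.asarray(left_dir, float)
    p2, d2 = np.asarray(right_eye, float), np.asarray(right_dir, float)
    w0 = p1 - p2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w0, d2 @ w0
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None  # parallel lines of sight
    s = (b * e - c * d) / denom  # distance along the left ray (in units of d1)
    t = (a * e - b * d) / denom  # distance along the right ray (in units of d2)
    if s <= 0 or t <= 0:
        return float("inf")  # closest approach is behind the eyes: divergent
    closest_left = p1 + s * d1
    closest_right = p2 + t * d2
    return float(np.linalg.norm(closest_left - closest_right))
```

Two eyes aimed at the same point give a miss distance of about zero, whereas "googly" eyes that point outwards never meet in front of the face, so the function returns infinity. How large a gap should count as suspicious would be a further, separate assumption.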

Intel claims that by examining both of these characteristics, it can quickly determine whether a video is authentic or fake.

The company says FakeCatcher is 96% accurate. So we asked to try out the system, and Intel agreed.

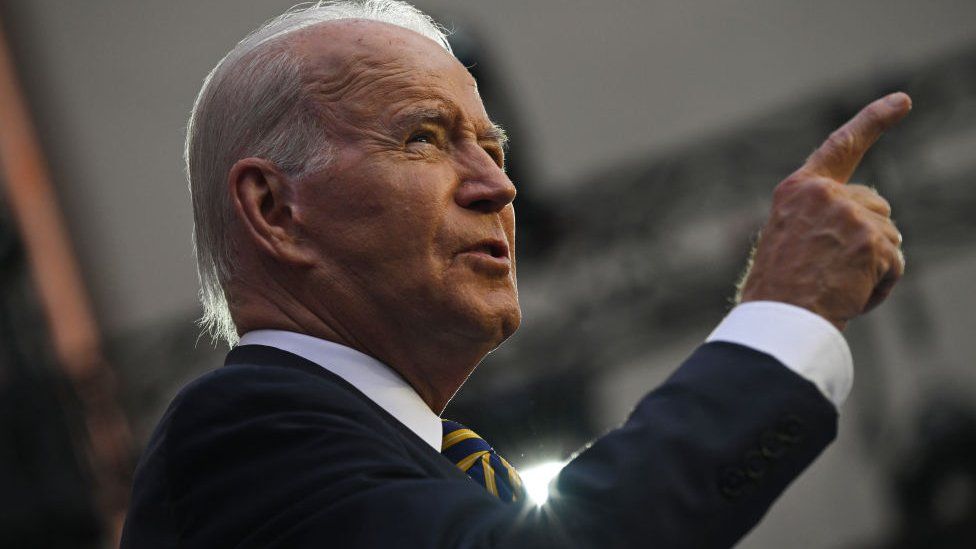

We used about a dozen clips of former US President Donald Trump and current US President Joe Biden.

Some were deepfakes created by the Massachusetts Institute of Technology (MIT); others were genuine.

The system seemed to be fairly effective at detecting deepfakes.

Most of the fakes we used were lip-synced versions of real videos, in which the mouth and voice had been altered.

And it got every answer right, bar one.

However, once we moved on to real, authentic videos, it began to run into problems.

On several occasions, the system said a video was fake when it was in fact real.

The more pixelated a video is, the harder it is to pick up blood flow.

The system also does not analyse audio. As a result, some videos that sounded fairly clearly real when you listened to the voice were labelled as fake.

The worry is that if the program says a genuine video is fake, it could cause real problems.

When we put this point to Ms Demir, she says that "verifying something as fake, versus 'be careful, this may be fake' is weighted differently".

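In other words, she argues, the system deliberately errs on the side of caution: it is better to catch all the fakes, and catch some real videos too, than to miss fakes.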
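The trade-off Ms Demir describes is the familiar one of choosing a decision threshold. In the hypothetical Python sketch below, each video is given a made-up "fake score" between 0 and 1 (these are toy numbers, not results from FakeCatcher or from our tests), and any video scoring at or above the threshold is flagged as fake.

```python
def rates_at_threshold(scores, is_fake, threshold):
    """Return (share of fakes flagged, share of real videos wrongly flagged)."""
    flagged = [score >= threshold for score in scores]
    fake_flags = [f for f, fake in zip(flagged, is_fake) if fake]
    real_flags = [f for f, fake in zip(flagged, is_fake) if not fake]
    return sum(fake_flags) / len(fake_flags), sum(real_flags) / len(real_flags)

# Toy data: four fakes followed by four real videos, with invented scores.
scores = [0.9, 0.7, 0.55, 0.4, 0.6, 0.35, 0.2, 0.1]
is_fake = [True, True, True, True, False, False, False, False]

for threshold in (0.5, 0.3):
    caught, false_alarms = rates_at_threshold(scores, is_fake, threshold)
    print(f"threshold {threshold}: {caught:.0%} of fakes caught, "
          f"{false_alarms:.0%} of real videos flagged")
```

With these invented scores, a threshold of 0.5 catches 75% of the fakes and wrongly flags 25% of the real videos; lowering it to 0.3 catches every fake but flags half of the real videos.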

Deepfakes can be incredibly subtle: a two-second clip in a political campaign advert, for example. They can also be of low quality. And a fake can be made by changing only the voice.

In this respect, FakeCatcher's ability to work "in the wild", in real-world contexts, has been questioned.

Matt Groh is an assistant professor at Northwestern University in Illinois, and a deepfakes expert.

"I don't doubt the stats that they listed in their initial evaluation," he says. "But what I do doubt is whether the stats are relevant to real-world contexts."

This is where it gets difficult to evaluate FakeCatcher's tech.

Programmes such as facial-recognition systems often quote extremely generous accuracy statistics.

However, when they are actually tested in the real world, they can be less accurate.

Earlier this year the BBC tested Clearview AI's facial recognition system, using our own pictures. Although the power of the tech was impressive, it was also clear that the more pixelated the picture, and the more side-on the face in the photo, the harder it was for the programme to identify someone successfully.

In essence, the accuracy is entirely dependent on the difficulty of the test.

Intel says FakeCatcher has gone through rigorous testing. This includes a "wild" test, for which the company put together 140 fake videos and their real counterparts.

In this test, the system had a success rate of 91%, Intel says.

However, Matt Groh and other researchers want to see the system independently analysed. They do not think it is good enough for Intel to set its own test.

"I would love to evaluate these systems," Mr Groh says.

"I think it's really important when we're designing audits and trying to understand how accurate something is in a real world context," he says.

It is surprisingly difficult to tell a fake video from a real one, and this technology certainly has potential.

But from our limited tests, it has a way to go yet.

Watch the full report on this week's episode of Click.
